Challenges and Solutions in Key-Driven Testing for AI Code Generators

Introduction

The rapid development of artificial intelligence (AI) has led to sophisticated code generators that promise to transform software development. These AI-powered tools can automatically generate code snippets, entire functions, or even complete applications from high-level specifications. However, ensuring the quality and reliability of AI-generated code poses significant challenges, particularly for key-driven testing. This article explores the main challenges of key-driven testing for AI code generators and presents potential solutions to each.

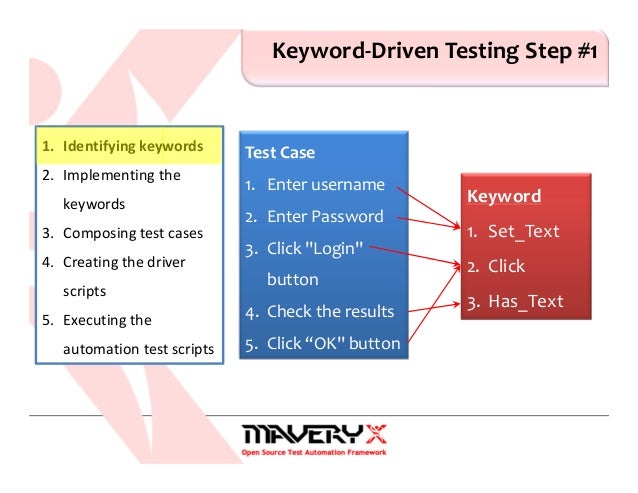

Understanding Key-Driven Testing

Key-driven testing is a methodology in which test cases are generated and executed from predefined keys, or parameters. In the context of AI code generators, key-driven testing means creating a set of inputs (keys) that are used to evaluate the output of the generated code. The goal is to ensure that the AI-generated code meets the desired functional and performance criteria.
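To make the idea concrete, here is a minimal Python sketch of a key-driven test. It assumes the AI generator returned a palindrome checker; `generated_is_palindrome`, `TEST_KEYS`, and `run_key_driven_tests` are hypothetical names chosen for illustration. Each key is an input paired with its expected output, and the runner records every mismatch or exception instead of stopping at the first one.

```python
from typing import Callable

# Hypothetical stand-in for a function produced by an AI code generator.
def generated_is_palindrome(text: str) -> bool:
    cleaned = "".join(ch.lower() for ch in text if ch.isalnum())
    return cleaned == cleaned[::-1]

# Each key is an input paired with the output the generated code must produce.
TEST_KEYS: list[tuple[str, bool]] = [
    ("racecar", True),
    ("A man, a plan, a canal: Panama", True),
    ("hello", False),
    ("", True),  # edge case: an empty string reads the same both ways
]

def run_key_driven_tests(func: Callable[[str], bool],
                         keys: list[tuple[str, bool]]) -> list[str]:
    """Run func against every key and return a description of each failure."""
    failures = []
    for key, expected in keys:
        try:
            actual = func(key)
        except Exception as exc:  # a crash in generated code counts as a failure
            failures.append(f"{key!r}: raised {exc!r}")
            continue
        if actual != expected:
            failures.append(f"{key!r}: expected {expected}, got {actual}")
    return failures

if __name__ == "__main__":
    failures = run_key_driven_tests(generated_is_palindrome, TEST_KEYS)
    print("all keys passed" if not failures else "\n".join(failures))
```

Because the keys are plain data, the same table can be reused unchanged when the generator produces a new version of the function.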

Challenges in Key-Driven Testing for AI Code Generators

Variability in AI Output

Challenge: AI code generators, particularly those based on machine learning, can produce different outputs for the same input because of the inherently probabilistic nature of these models. This variability makes it difficult to create consistent, repeatable test cases.

Solution: Build a robust, diverse set of test cases and inputs that covers a wide range of scenarios. Use statistical methods to analyze the variability in outputs and confirm that the generated code meets the required criteria across different outputs. Apply techniques such as regression testing to track and manage changes in the AI-generated code over time.
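One way to apply the statistical idea is to sample the generator several times for the same prompt, run the same keys against every sample, and accept the prompt only if the pass rate clears a threshold. The sketch below simulates three samples with hand-written functions; the candidate functions and the 0.9 threshold are assumptions for illustration, not values from any particular tool.

```python
from typing import Callable

Case = tuple[int, int]  # (input, expected output)

# Hypothetical: three samples returned for the same prompt
# ("return the absolute value of n"), standing in for repeated model calls.
def candidate_a(n: int) -> int:
    return abs(n)

def candidate_b(n: int) -> int:
    return n if n >= 0 else -n

def candidate_c(n: int) -> int:
    return n  # a flawed sample: ignores negative inputs

CASES: list[Case] = [(5, 5), (-3, 3), (0, 0)]

REQUIRED_PASS_RATE = 0.9  # assumed acceptance threshold; tune per project

def passes_all(func: Callable[[int], int], cases: list[Case]) -> bool:
    """True if the sample produces the expected output for every key."""
    return all(func(x) == expected for x, expected in cases)

def sample_pass_rate(candidates: list[Callable[[int], int]],
                     cases: list[Case]) -> float:
    """Fraction of sampled outputs that pass every key-driven case."""
    if not candidates:
        raise ValueError("need at least one sample")
    passed = sum(passes_all(c, cases) for c in candidates)
    return passed / len(candidates)

if __name__ == "__main__":
    rate = sample_pass_rate([candidate_a, candidate_b, candidate_c], CASES)
    print(f"pass rate: {rate:.2f}")
    print("accept" if rate >= REQUIRED_PASS_RATE else "reject: output too variable")
```

Tracking this pass rate per prompt across model versions also gives regression testing a simple numeric signal to watch.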

Complexity of AI-Generated Code

Challenge: Code generated by AI systems can be complex and does not always follow best practices or standard coding conventions. This complexity can make the code difficult to review and test manually.

Solution: Use automated code analysis tools to assess the quality of the AI-generated code and its adherence to coding standards. Integrate static code analysis, linters, and code quality metrics into the testing pipeline. This surfaces potential issues early and helps keep the generated code maintainable and efficient.
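Production pipelines would normally rely on established linters such as pylint, flake8, or Ruff. As a sketch of what such a check does internally, the following uses Python's standard `ast` module to flag generated functions that lack a docstring or contain more branching statements than an assumed limit. `GENERATED_SOURCE` and `MAX_BRANCHES` are hypothetical, and the branch count is a crude proxy rather than true cyclomatic complexity.

```python
import ast

# Hypothetical AI-generated snippet under review.
GENERATED_SOURCE = '''
def total(items):
    result = 0
    for item in items:
        if item > 0:
            if item % 2 == 0:
                result += item
    return result

def average(values):
    """Return the arithmetic mean of values."""
    return sum(values) / len(values)
'''

MAX_BRANCHES = 2  # assumed project limit on branching statements per function

def review_functions(source: str) -> list[str]:
    """Flag functions that lack a docstring or exceed MAX_BRANCHES branches."""
    findings = []
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if ast.get_docstring(node) is None:
                findings.append(f"{node.name}: missing docstring")
            # Count if/for/while/try statements anywhere inside the function.
            branches = sum(
                isinstance(child, (ast.If, ast.For, ast.While, ast.Try))
                for child in ast.walk(node)
            )
            if branches > MAX_BRANCHES:
                findings.append(f"{node.name}: {branches} branches (limit {MAX_BRANCHES})")
    return findings

if __name__ == "__main__":
    for finding in review_functions(GENERATED_SOURCE):
        print(finding)
```

A check like this can run as a gate before the key-driven tests, so structurally problematic output is reported even when it happens to pass every key.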

Limited Understanding of AI Models

Challenge: Testers may not fully understand the AI models used for code generation, which can hinder their ability to design effective test cases and interpret results accurately.

Solution: Strengthen collaboration between AI developers and testers. Provide training and documentation on the underlying AI models and their expected behavior. Foster a deep understanding of how different inputs affect the generated code and how to interpret the results of key-driven tests.

Dynamic Nature of AI Models

Challenge: AI models are frequently updated and improved, which can change the behavior of the generated code. This dynamic nature complicates the testing process and requires continuous adjustments to test cases.

Solution: Implement continuous integration and continuous testing (CI/CT) practices to keep the testing process aligned with changes in the AI models. Regularly update test cases and inputs to reflect the latest model updates. Use version control systems to manage different versions of the generated code and their test results.
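A lightweight way to notice behavior changes between model versions is to snapshot, per key, a fingerprint of the generated code and its test verdict, commit the snapshot to version control, and diff it whenever the model changes. The snapshot layout and the `diff_snapshots` helper below are assumptions for illustration.

```python
import hashlib

def fingerprint(code: str) -> str:
    """Short, stable hash of generated code so snapshots stay compact."""
    return hashlib.sha256(code.encode("utf-8")).hexdigest()[:12]

# Snapshot layout (assumed): key -> (code fingerprint, did the key-driven test pass?)
Snapshot = dict[str, tuple[str, bool]]

def diff_snapshots(baseline: Snapshot, candidate: Snapshot) -> dict[str, list[str]]:
    """Classify each baseline key by how it changed in the candidate snapshot."""
    report: dict[str, list[str]] = {
        "regressed": [], "fixed": [], "code_changed": [], "missing": [],
    }
    for key, (old_hash, old_passed) in baseline.items():
        if key not in candidate:
            report["missing"].append(key)
            continue
        new_hash, new_passed = candidate[key]
        if old_passed and not new_passed:
            report["regressed"].append(key)     # passed before, fails now
        elif not old_passed and new_passed:
            report["fixed"].append(key)         # failed before, passes now
        elif old_hash != new_hash:
            report["code_changed"].append(key)  # same verdict, different code
    return report

if __name__ == "__main__":
    # Hypothetical snapshots from two versions of the code generator.
    v1 = {
        "sort_list": (fingerprint("def f(xs): return sorted(xs)"), True),
        "parse_int": (fingerprint("def g(s): return int(s)"), True),
    }
    v2 = {
        "sort_list": (fingerprint("def f(xs): return list(sorted(xs))"), True),
        "parse_int": (fingerprint("def g(s): return int(float(s))"), False),
    }
    print(diff_snapshots(v1, v2))
```

In a CI/CT pipeline, a non-empty `regressed` list would be a natural condition for failing the build, while `code_changed` entries might only warrant review.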

Difficulty in Defining Key Parameters

Challenge: Identifying and defining appropriate key parameters for testing can be difficult, especially when the AI code generator produces complex or unexpected outputs.

Solution: Work closely with domain experts to identify relevant key parameters and develop a comprehensive set of test cases. Use exploratory testing to uncover edge cases and unusual behaviors. Draw on feedback from real-world use cases to refine and improve the key parameters used in testing.
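When a key parameter has a known valid range, boundary-value analysis gives a systematic starting set of keys, and a Cartesian product combines several parameters into concrete test cases. This is a minimal sketch; the pagination parameters `page_size` and `sort_order` are hypothetical.

```python
from itertools import product

def boundary_values(low: int, high: int) -> list[int]:
    """Boundary-value keys for an integer range [low, high], plus just-outside values."""
    candidates = [low - 1, low, low + 1, high - 1, high, high + 1]
    return sorted(set(candidates))  # de-duplicate when the range is very narrow

def build_key_grid(params: dict[str, list[object]]) -> list[dict[str, object]]:
    """Cartesian product of parameter values: one dict of keys per test case."""
    names = list(params)
    return [dict(zip(names, combo)) for combo in product(*params.values())]

if __name__ == "__main__":
    # Hypothetical parameters for a generated pagination helper.
    grid = build_key_grid({
        "page_size": boundary_values(1, 100),
        "sort_order": ["asc", "desc"],
    })
    print(len(grid), "test cases")
    print(grid[0])
```

A full Cartesian product grows multiplicatively with each added parameter; for larger parameter spaces, pairwise (all-pairs) selection is a common way to keep the case count manageable.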

Scalability of Testing Efforts

Challenge: As AI code generators produce more code and handle larger projects, scaling testing efforts to cover all possible scenarios becomes increasingly difficult.

Solution: Adopt test automation frameworks and tools that can handle large-scale testing effectively. Use test case management systems to organize and prioritize test scenarios. Implement parallel testing and cloud-based testing solutions to manage the increased testing workload efficiently.
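Because each key-driven case is independent, cases can be fanned out across workers. This sketch uses `concurrent.futures.ThreadPoolExecutor` from the Python standard library; `generated_square` stands in for hypothetical generated code.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical generated function under test.
def generated_square(n: int) -> int:
    return n * n

def check_case(case: tuple[int, int]) -> tuple[int, bool]:
    """Run a single key and report (input, passed)."""
    key, expected = case
    return key, generated_square(key) == expected

def run_parallel(cases: list[tuple[int, int]], workers: int = 4) -> list[tuple[int, bool]]:
    """Fan the cases out over a thread pool; map() keeps results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(check_case, cases))

if __name__ == "__main__":
    cases = [(n, n * n) for n in range(1000)]
    results = run_parallel(cases)
    failed = [key for key, passed in results if not passed]
    print(f"{len(results) - len(failed)} passed, {len(failed)} failed")
```

Threads suit I/O-bound work such as running generated code in subprocesses or remote sandboxes. For CPU-bound pure-Python tests, `ProcessPoolExecutor` or a test-runner plugin such as pytest-xdist is usually a better fit.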

Best Practices for Key-Driven Testing

Define Clear Objectives: Establish clear objectives and criteria for key-driven testing to ensure that the AI-generated code meets the desired functional and performance standards.

Design Comprehensive Test Cases: Develop a diverse set of test cases that covers a wide range of scenarios, including edge cases and boundary conditions. Make sure the test cases are representative of real-world use cases.

Leverage Automation: Use automation tools and frameworks to streamline the testing process and handle large-scale testing efficiently. Automated testing helps manage the complexity and variability of AI-generated code.

Continuous Improvement: Continuously refine the key-driven testing process based on feedback and results. Adapt test cases and methodologies to keep pace with changes in AI models and code generation techniques.

Foster Collaboration: Encourage collaboration between AI developers, testers, and domain experts to ensure a thorough understanding of the AI models and the effective design of test cases.
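The practice of defining clear objectives is easier to enforce when the criteria live in code rather than only in a document. In this sketch, the thresholds and field names in `AcceptanceCriteria` and `RunSummary` are hypothetical placeholders that a team would set for its own project.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AcceptanceCriteria:
    """Hypothetical, project-specific thresholds for accepting generated code."""
    min_pass_rate: float = 0.95    # share of key-driven cases that must pass
    max_lint_findings: int = 0     # static-analysis findings tolerated
    max_runtime_ms: float = 200.0  # performance budget per test run

@dataclass(frozen=True)
class RunSummary:
    """Results collected from one key-driven test run."""
    passed: int
    total: int
    lint_findings: int
    runtime_ms: float

def evaluate(summary: RunSummary, criteria: AcceptanceCriteria) -> list[str]:
    """Return the unmet objectives; an empty list means the code is accepted."""
    unmet = []
    rate = summary.passed / summary.total if summary.total else 0.0
    if rate < criteria.min_pass_rate:
        unmet.append(f"pass rate {rate:.2%} below {criteria.min_pass_rate:.0%}")
    if summary.lint_findings > criteria.max_lint_findings:
        unmet.append(f"{summary.lint_findings} lint finding(s)")
    if summary.runtime_ms > criteria.max_runtime_ms:
        unmet.append(f"runtime {summary.runtime_ms:.0f} ms over budget")
    return unmet

if __name__ == "__main__":
    summary = RunSummary(passed=48, total=50, lint_findings=1, runtime_ms=150.0)
    print(evaluate(summary, AcceptanceCriteria()))
```

Keeping the criteria in a frozen dataclass makes them easy to review, version alongside the tests, and override per project.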

Conclusion

Key-driven testing for AI code generators presents a unique set of challenges, from handling variability in outputs to managing the complexity of generated code. By implementing the solutions and best practices outlined in this article, organizations can improve the effectiveness of their testing efforts and help ensure the reliability and quality of AI-generated code. As AI technology continues to evolve, adapting and refining testing strategies will be crucial to maintaining high standards in software development and delivery.