Guidelines for Implementing Sanity Testing in AI Code Generation

In the realm of AI and machine learning, code generation has emerged as a powerful tool, enabling developers to automatically produce code snippets, algorithms, and even entire applications. While this technology promises efficiency and innovation, it also presents unique challenges that must be addressed to ensure code quality and reliability. One key aspect of maintaining high standards in AI code generation is implementing effective sanity testing. This article explores best practices for implementing sanity testing in AI code generation, providing insights into how to ensure that AI-generated code meets the required standards.

What Is Sanity Testing?

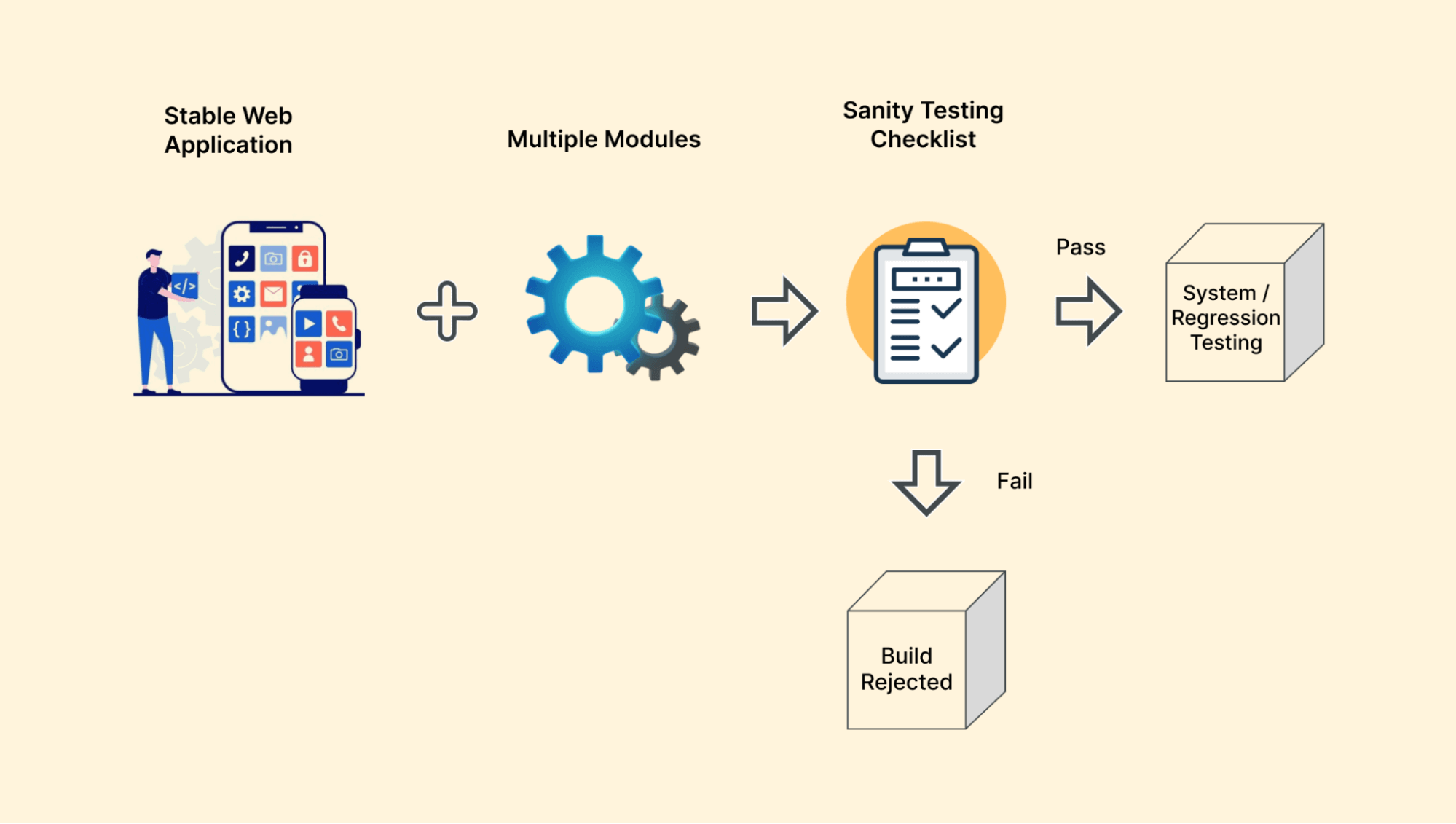

Sanity testing, a subset of software testing, is designed to verify that a particular function or component of a system works correctly after changes or updates. Unlike comprehensive testing approaches that cover a broad range of scenarios, sanity testing focuses on validating core functionality and ensuring that the most critical parts of the application operate as expected. In the context of AI code generation, sanity testing aims to confirm that the generated code runs, is free of obvious errors, and meets the original requirements.
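
As a rough illustration, the most basic sanity check for a generated Python snippet asks three questions: does it parse, does it run, and does it define what was requested? The sketch below assumes the generated code arrives as a string and that the requirement is a function named `add`; `GENERATED_SOURCE` and `sanity_check` are hypothetical names, not part of any particular tool. Because `exec()` runs arbitrary code, a real pipeline would execute untrusted output in a sandbox.

```python
import ast

# Hypothetical AI-generated snippet; in a real pipeline this string comes from the model.
GENERATED_SOURCE = """
def add(a, b):
    return a + b
"""


def sanity_check(source: str, expected_name: str) -> list[str]:
    """Return a list of problems; an empty list means the snippet passed."""
    # 1. Is the snippet syntactically valid Python?
    try:
        ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error: {exc.msg} (line {exc.lineno})"]

    # 2. Does it execute at module level without raising?
    #    exec() runs arbitrary code, so isolate untrusted output in a sandbox.
    namespace: dict = {}
    try:
        exec(compile(source, "<generated>", "exec"), namespace)
    except Exception as exc:
        return [f"module-level error: {exc!r}"]

    # 3. Does it define the callable the requirements asked for?
    if not callable(namespace.get(expected_name)):
        return [f"missing callable {expected_name!r}"]
    return []


print(sanity_check(GENERATED_SOURCE, "add"))  # → []
print(sanity_check("def add(a, b) return a", "add"))  # reports a syntax error
```

Returning a list of problems rather than raising keeps the check cheap to call on every regeneration and easy to aggregate across many snippets.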

Why Sanity Testing Is Important for AI Code Generation

AI code generation systems, such as those based on machine learning models, produce code with varying degrees of accuracy. Because AI models are complex and their output is hard to predict, the generated code may contain bugs, logical errors, or unintended behavior. Implementing sanity testing helps to:

Ensure Basic Functionality: Verify that the AI-generated code performs its intended functions.

Identify Major Issues Early: Detect significant errors or failures before they escalate.

Validate Integration Points: Ensure that the generated code integrates correctly with existing systems or components.

Enhance Reliability: Improve the overall reliability and stability of the generated code.

Best Practices for Implementing Sanity Testing in AI Code Generation

Define Clear Requirements and Expectations

Before initiating sanity testing, it’s essential to define clear requirements and expectations for the AI-generated code. This includes specifying the desired functionality, performance metrics, and any constraints or limitations. Clear criteria help ensure that the sanity tests are aligned with the intended goals of the code generation process.
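
One way to make requirements actionable is to write them down as data that tests can check automatically. The sketch below is a minimal illustration, assuming a Python target; the `Requirements` fields (function name, parameter names, a time budget, and a list of forbidden imports as an example constraint) are hypothetical choices, not a standard schema.

```python
import ast
import inspect
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """Hypothetical machine-checkable spec, written before any code is generated."""
    function_name: str
    parameters: tuple[str, ...]  # required parameter names, in order
    max_seconds: float = 0.5  # performance budget per call
    forbidden_imports: tuple[str, ...] = ("os", "subprocess")  # example constraint


def signature_matches(spec: Requirements, func) -> bool:
    """True if func has the specified name and exactly the specified parameters."""
    return (func.__name__ == spec.function_name
            and tuple(inspect.signature(func).parameters) == spec.parameters)


def forbidden_imports_used(spec: Requirements, source: str) -> set[str]:
    """Top-level module names imported by source that the spec forbids."""
    used = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            used.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            used.add(node.module.split(".")[0])
    return used & set(spec.forbidden_imports)


ADD_SPEC = Requirements(function_name="add", parameters=("a", "b"))


def add(a, b):  # stand-in for generated code
    return a + b


print(signature_matches(ADD_SPEC, add))  # → True
print(forbidden_imports_used(ADD_SPEC, "import os.path\nimport math"))  # → {'os'}
```

Keeping the spec frozen and separate from the tests means the same criteria can drive the functional, performance, and constraint checks described in the following sections.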

Establish a Baseline for Comparison

To evaluate the performance of AI-generated code effectively, establish a baseline for comparison. This can be done by generating code from known inputs and comparing the results against expected outputs. The baseline helps identify deviations from expected behavior and assess the quality of the generated code.
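
A simple form of baseline is a fixed set of input/output pairs whose answers are already known, run against every newly generated version of a function. The sketch below assumes the generated code is a function `generated_add` (a hypothetical stand-in) and treats both wrong answers and crashes as deviations.

```python
# Hypothetical baseline: known inputs paired with outputs verified by a human
# or a trusted reference implementation.
BASELINE = [
    ((2, 3), 5),
    ((-1, 1), 0),
    ((0, 0), 0),
]


def generated_add(a, b):  # stand-in for AI-generated code
    return a + b


def compare_to_baseline(func, baseline):
    """Return (args, expected, actual) for every case that deviates from the baseline."""
    deviations = []
    for args, expected in baseline:
        try:
            actual = func(*args)
        except Exception as exc:  # a crash also counts as a deviation
            actual = repr(exc)
        if actual != expected:
            deviations.append((args, expected, actual))
    return deviations


print(compare_to_baseline(generated_add, BASELINE))  # → []
```

Keeping the baseline under version control lets later model versions be compared against exactly the same reference cases.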

Automate Testing Processes

Automation is key to efficient and consistent sanity testing. Develop automated test suites that cover the critical functionality of the AI-generated code. Automated tests can be run frequently and consistently, providing rapid feedback and identifying issues early in the development cycle.
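
For Python output, the standard library's `unittest` module is enough to build a small automated sanity suite. The sketch below assumes a hypothetical generated function `generated_add`; the three tests are illustrative, not a recommended minimum. The suite runs quietly and returns its result object so a pipeline can act on it.

```python
import io
import unittest


def generated_add(a, b):  # stand-in for AI-generated code
    return a + b


class AddSanityTests(unittest.TestCase):
    """Fast checks of critical behaviour, meant to run on every regeneration."""

    def test_basic_sum(self):
        self.assertEqual(generated_add(2, 3), 5)

    def test_negative_numbers(self):
        self.assertEqual(generated_add(-4, 1), -3)

    def test_rejects_incompatible_types(self):
        with self.assertRaises(TypeError):
            generated_add(1, "x")


def run_sanity_suite() -> unittest.TestResult:
    """Run the suite without console noise and return the result for gating."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(AddSanityTests)
    return unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)


result = run_sanity_suite()
print(f"ran={result.testsRun} ok={result.wasSuccessful()}")  # → ran=3 ok=True
```

In a CI job, the script could exit with a non-zero status whenever `result.wasSuccessful()` is false, so a failing regeneration blocks the build; test runners such as pytest would also discover this `TestCase` without changes.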

Integrate Test Cases for Common Scenarios

Include test cases that cover common scenarios and edge cases relevant to the generated code. This ensures that the code performs correctly under various conditions and handles different inputs gracefully. Test cases should also cover boundary conditions and potential error scenarios to confirm robustness.
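
A table-driven layout keeps common cases, boundaries, and error cases side by side. The sketch below uses a hypothetical generated function `generated_clamp` and marks cases that must raise by putting the expected exception class in place of a value; the convention is an assumption of this example, not a library feature.

```python
def generated_clamp(value, low, high):  # stand-in for AI-generated code
    if low > high:
        raise ValueError("low must not exceed high")
    return max(low, min(value, high))


# (args, expected) pairs; an exception class means "this call must raise it".
CASES = [
    ((5, 0, 10), 5),           # common case
    ((0, 0, 10), 0),           # lower boundary
    ((10, 0, 10), 10),         # upper boundary
    ((-3, 0, 10), 0),          # below the range
    ((42, 0, 10), 10),         # above the range
    ((5, 10, 0), ValueError),  # error scenario: inverted range
]


def run_cases(func, cases):
    """Return (args, expected, actual) for every case the function gets wrong."""
    failures = []
    for args, expected in cases:
        expects_error = isinstance(expected, type) and issubclass(expected, Exception)
        try:
            actual = func(*args)
        except Exception as exc:
            if not (expects_error and isinstance(exc, expected)):
                failures.append((args, expected, repr(exc)))
            continue
        # Returning normally is a failure if an exception was expected.
        if expects_error or actual != expected:
            failures.append((args, expected, actual))
    return failures


print(run_cases(generated_clamp, CASES))  # → []
```

With pytest, the same table could feed `pytest.mark.parametrize` so that each case is reported as its own test.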

Monitor Performance Metrics

Sanity tests should include performance metrics to assess the efficiency of the AI-generated code. Monitor aspects such as execution time, memory usage, and resource consumption to ensure that the code meets performance expectations. Performance testing helps identify potential bottlenecks and optimize code efficiency.
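
In Python, execution time and peak memory can be sampled with the standard `time.perf_counter` and `tracemalloc` modules. The sketch below is a coarse sanity-level check, not a benchmark; `generated_squares` and the budget values are hypothetical. It times the function before enabling tracing because `tracemalloc` slows allocations, and it only sees memory allocated by Python itself.

```python
import time
import tracemalloc


def measure(func, *args, repeats=5):
    """Return (best wall-clock seconds, peak traced bytes) for func(*args)."""
    # Time first, without tracing, because tracemalloc slows allocations down.
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        best = min(best, time.perf_counter() - start)

    # One traced call to find the peak memory Python allocated during execution.
    tracemalloc.start()
    try:
        func(*args)
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return best, peak_bytes


def within_budget(func, *args, max_seconds, max_bytes):
    """True if func(*args) stays inside both (hypothetical) performance budgets."""
    seconds, peak_bytes = measure(func, *args)
    return seconds <= max_seconds and peak_bytes <= max_bytes


def generated_squares(n):  # stand-in for AI-generated code
    return [i * i for i in range(n)]


seconds, peak = measure(generated_squares, 100_000)
print(f"best={seconds * 1000:.2f} ms, peak={peak / 1024:.0f} KiB")
print(within_budget(generated_squares, 100_000, max_seconds=1.0, max_bytes=50_000_000))
```

Taking the best of several runs reduces noise from other processes; budgets should be generous enough that only real regressions trip them.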

Validate Integration with Existing Systems

If the AI-generated code is intended to integrate with existing systems or components, it’s critical to test the integration points. Ensure that the generated code interacts correctly with other modules and maintains compatibility with existing interfaces and protocols.
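
When the existing system depends on an interface, an integration sanity check can confirm that the generated component satisfies it and works with real downstream code. The sketch below assumes a hypothetical `Tokenizer` interface expressed as a `typing.Protocol` and a hypothetical existing function `count_words`; note that a `runtime_checkable` protocol only verifies that the method exists, so the return type is checked separately.

```python
from typing import Protocol, runtime_checkable


@runtime_checkable
class Tokenizer(Protocol):
    """Interface the existing system already depends on (hypothetical)."""

    def tokenize(self, text: str) -> list[str]: ...


def count_words(tokenizer: Tokenizer, text: str) -> int:
    """Existing downstream code that the generated component must plug into."""
    return len(tokenizer.tokenize(text))


class GeneratedTokenizer:  # stand-in for AI-generated code
    def tokenize(self, text: str) -> list[str]:
        return text.split()


def check_integration(component) -> list[str]:
    """Return a list of integration problems; empty means the component fits."""
    # isinstance() on a runtime_checkable Protocol only checks the method exists.
    if not isinstance(component, Tokenizer):
        return ["does not implement Tokenizer.tokenize"]
    problems = []
    result = component.tokenize("hello integration test")
    if not (isinstance(result, list) and all(isinstance(t, str) for t in result)):
        problems.append(f"tokenize returned {type(result).__name__}, expected list[str]")
    # Exercise the real downstream caller, not just the interface.
    if count_words(component, "one two three") != 3:
        problems.append("count_words gives the wrong result with this tokenizer")
    return problems


print(check_integration(GeneratedTokenizer()))  # → []
```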

Review and Refine Testing Processes

Regularly review and refine sanity testing procedures based on feedback and observations. Analyze test results to identify recurring issues or patterns and adjust test cases accordingly. Continuous improvement of testing procedures increases the effectiveness of sanity testing over time.
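
Spotting recurring issues can start with simple counting over past test results. The sketch below assumes a hypothetical log format (one record per failed check, tagged with the run it came from) and reports tests that failed in several distinct runs, which are good candidates for new targeted test cases or prompt changes.

```python
from collections import Counter

# Hypothetical history: one record per failed sanity check across several runs.
FAILURE_LOG = [
    {"run": 1, "test": "test_empty_input", "error": "IndexError"},
    {"run": 1, "test": "test_unicode", "error": "UnicodeEncodeError"},
    {"run": 2, "test": "test_empty_input", "error": "IndexError"},
    {"run": 3, "test": "test_empty_input", "error": "IndexError"},
    {"run": 3, "test": "test_large_input", "error": "TimeoutError"},
]


def recurring_failures(log, min_runs=2):
    """Tests that failed in at least min_runs distinct runs, most frequent first."""
    runs_per_test = Counter()
    seen = set()
    for record in log:
        key = (record["test"], record["run"])
        if key not in seen:  # count each test at most once per run
            seen.add(key)
            runs_per_test[record["test"]] += 1
    return [(test, n) for test, n in runs_per_test.most_common() if n >= min_runs]


print(recurring_failures(FAILURE_LOG))  # → [('test_empty_input', 3)]
```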

Implement Feedback Loops

Establish feedback loops between the AI code generation process and the sanity testing phase. Use insights from test results to refine the AI models and improve the quality of the generated code. Feedback loops help create a more iterative and adaptive development process.
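
At the level of individual requests, a feedback loop can be as simple as passing failed checks back into the next prompt. The sketch below shows only that prompt-level loop; it does not cover retraining or fine-tuning models. `generate_with_feedback`, `fake_model`, and `check_add` are hypothetical, and the fake model stands in for a real model call so the example runs offline.

```python
from typing import Callable

ModelCall = Callable[[str], str]  # prompt -> generated source (hypothetical model API)
Checker = Callable[[str], list[str]]  # generated source -> list of problems


def generate_with_feedback(prompt: str, generate: ModelCall, check: Checker,
                           max_attempts: int = 3) -> tuple[str, list[str]]:
    """Regenerate until the sanity checks pass or attempts run out.

    Returns the last source produced and its remaining problems (empty on success).
    """
    current_prompt = prompt
    source, problems = "", ["no attempt made"]
    for _ in range(max_attempts):
        source = generate(current_prompt)
        problems = check(source)
        if not problems:
            break
        # Feed the concrete test failures back into the next request.
        current_prompt = (prompt + "\n\nThe previous attempt failed these checks:\n"
                          + "\n".join(f"- {p}" for p in problems))
    return source, problems


def fake_model(prompt: str) -> str:
    # Stand-in for a real model: it "fixes" its output once it sees feedback.
    if "failed these checks" in prompt:
        return "def add(a, b):\n    return a + b\n"
    return "def add(a, b):\n    return a - b\n"


def check_add(source: str) -> list[str]:
    namespace: dict = {}
    exec(source, namespace)  # sandbox this in real use
    return [] if namespace["add"](2, 3) == 5 else ["add(2, 3) != 5"]


source, problems = generate_with_feedback("Write add(a, b).", fake_model, check_add)
print(problems)  # → []
```

Capping the number of attempts matters: a model that cannot satisfy the checks should surface its remaining problems to a human rather than loop indefinitely.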

Ensure Comprehensive Logging and Reporting

Maintain comprehensive logging and reporting mechanisms for sanity testing. Detailed logs and reports provide valuable information for diagnosing issues, tracking test results, and identifying trends. Clear documentation of test cases, results, and any detected issues facilitates better analysis and decision-making.
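
A lightweight approach combines the standard `logging` module for a running record with a machine-readable JSON summary per test run. The sketch below assumes results arrive as a mapping from test name to an error message (or `None` for a pass); the report fields and the artifact ID `gen-0042` are hypothetical.

```python
import json
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("sanity")


def build_report(source_id, results):
    """Log each result and return a JSON summary.

    results maps a test name to an error message, or to None if the test passed.
    """
    failures = {}
    for name, error in results.items():
        if error is None:
            log.info("%s PASS %s", source_id, name)
        else:
            log.error("%s FAIL %s: %s", source_id, name, error)
            failures[name] = error
    report = {
        "source_id": source_id,  # which generated artifact was tested
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "total": len(results),
        "passed": len(results) - len(failures),
        "failures": failures,
    }
    return json.dumps(report, indent=2)


print(build_report("gen-0042", {
    "test_basic_sum": None,
    "test_empty_input": "IndexError: list index out of range",
}))
```

Tagging every log line and report with the ID of the generated artifact makes it possible to trace a failure back to the exact model output that caused it.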

Collaborate with Domain Experts

Collaborate with domain experts who can provide insights into the specific requirements and nuances of the generated code. Their expertise helps ensure that the sanity testing process addresses domain-specific concerns and meets the expected standards of quality.

Consider User Feedback

Whenever possible, involve users or stakeholders in the testing process to gather feedback on the AI-generated code. User feedback provides additional perspectives on functionality, usability, and overall satisfaction, leading to a more comprehensive evaluation of the code.

Adopt a Continuous Testing Approach

Integrate sanity testing into a continuous testing approach, in which tests are run regularly throughout the development cycle. Continuous testing ensures that issues are detected and addressed promptly, reducing the risk of defects accumulating over time.

Conclusion

Implementing sanity testing in AI code generation is vital for ensuring that the generated code meets functional, performance, and reliability standards. By following best practices such as defining clear requirements, automating testing processes, and incorporating feedback loops, developers can effectively validate the quality of AI-generated code and address potential issues early in the development cycle. As AI technology continues to advance, maintaining robust testing practices will be crucial for realizing the full potential of code generation while ensuring the delivery of high-quality, reliable software.