Challenges and Solutions in Incremental Testing for AI Code Generators

Introduction

In the rapidly evolving field of artificial intelligence (AI), code generators have emerged as powerful tools that can automatically produce code from high-level specifications. While these AI-driven tools promise greater productivity and efficiency in software development, they also introduce unique problems, particularly when it comes to testing. Incremental testing, an approach in which tests are added progressively as code is developed, is essential for ensuring the reliability of AI code generators. This article explores the challenges of incremental testing for AI code generators and offers practical solutions to address them.

Understanding Incremental Testing

Incremental testing involves continuously adding new tests to verify that newly written code works correctly without introducing regressions. This approach is particularly important for AI code generators, because the generated code can change as the underlying model learns and is updated. Incremental testing helps identify bugs early and ensures that changes to the code do not break existing functionality.
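As a minimal sketch of the idea, assuming each test can be written as a zero-argument Python callable that returns True on success, the hypothetical `IncrementalSuite` class below accumulates tests as code is added and re-runs the whole suite on every increment, flagging regressions (tests that passed on the previous run but fail now). In practice an established framework such as `unittest` or pytest would normally play this role.

```python
from typing import Callable, Dict, List


class IncrementalSuite:
    """Hypothetical helper: tests accumulate over time and every increment
    re-runs all of them so that regressions surface immediately."""

    def __init__(self) -> None:
        self.tests: Dict[str, Callable[[], bool]] = {}
        self.last_results: Dict[str, bool] = {}

    def add_test(self, name: str, check: Callable[[], bool]) -> None:
        self.tests[name] = check

    def run_increment(self) -> Dict[str, List[str]]:
        results: Dict[str, bool] = {}
        for name, check in self.tests.items():
            try:
                results[name] = bool(check())
            except Exception:
                # A crash in the code under test counts as a failure.
                results[name] = False
        # Regression: passed on the previous run, fails now.
        regressions = [n for n, ok in results.items()
                       if not ok and self.last_results.get(n, False)]
        # New failure: a test added in this increment that fails right away.
        new_failures = [n for n, ok in results.items()
                        if not ok and n not in self.last_results]
        self.last_results = results
        return {"regressions": regressions, "new_failures": new_failures}


# Usage: "generated" code lives in a dict so a later increment can replace it.
impl = {"add": lambda a, b: a + b}
suite = IncrementalSuite()
suite.add_test("add_basic", lambda: impl["add"](2, 3) == 5)
print(suite.run_increment())  # {'regressions': [], 'new_failures': []}

impl["add"] = lambda a, b: a - b  # a later increment breaks add()
suite.add_test("add_zero", lambda: impl["add"](4, 0) == 4)
print(suite.run_increment())  # {'regressions': ['add_basic'], 'new_failures': []}
```

Re-running every earlier test on each increment is what distinguishes a regression (something that used to work) from a test that simply has not passed yet.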

Challenges in Incremental Testing for AI Code Generators

Complexity of AI-Generated Code

AI code generators, particularly those based on deep learning models, can produce highly complex and varied code. This complexity makes it difficult to create comprehensive test cases that cover all possible scenarios. The generated code may contain unexpected patterns or structures that were not anticipated during the testing phase, leaving gaps in test coverage.

Solution: Automated test generation techniques can help address this challenge. For example, tools that analyze the structure of the AI-generated code and produce test cases accordingly can help manage its complexity. Incorporating formal methods and static analysis tools can also provide a deeper understanding of the code's behavior, making testing more effective.
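The snippet below is a minimal static-analysis sketch using Python's standard `ast` module, assuming the generator emits Python. It parses generated source without running it, lists the function signatures it finds, derives placeholder test names from them, and flags a couple of constructs (calls to `eval`/`exec`, bare `except:` clauses) chosen here purely for illustration. The function name `analyze_generated_code` and the deny-list are hypothetical.

```python
import ast
from typing import Dict, List

# Assumption: an illustrative deny-list; a real policy would be project-specific.
RISKY_CALLS = {"eval", "exec"}


def analyze_generated_code(source: str) -> Dict[str, List[str]]:
    """Statically inspect AI-generated Python source without executing it."""
    tree = ast.parse(source)
    functions: List[str] = []
    test_stubs: List[str] = []
    findings: List[str] = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            args = ", ".join(a.arg for a in node.args.args)
            functions.append(f"{node.name}({args})")
            # One placeholder test name per discovered function, to be filled in later.
            test_stubs.append(f"test_{node.name}")
        elif isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in RISKY_CALLS:
                findings.append(f"line {node.lineno}: call to {node.func.id}()")
        elif isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append(f"line {node.lineno}: bare except")
    return {"functions": functions, "test_stubs": test_stubs, "findings": findings}


sample = '''
def load_settings(path, strict):
    try:
        return eval(open(path).read())
    except:
        return None
'''
report = analyze_generated_code(sample)
print(report["functions"])        # ['load_settings(path, strict)']
print(sorted(report["findings"]))  # ['line 4: call to eval()', 'line 5: bare except']
```

Because nothing is executed, this kind of check is cheap enough to run on every generated increment before any dynamic tests.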

Dynamic Nature of AI Models

AI models are inherently dynamic: their behavior depends on the data they are trained on. As a result, an AI code generator's output can vary across training datasets or model updates. Incremental testing must therefore adapt to these changes, which complicates the testing process.

Solution: Applying version control to AI models helps manage changes effectively. By tracking model versions along with their corresponding outputs, testers can better understand how updates affect the generated code. In addition, a set of baseline tests covering the core functionality of the code generator provides a stable reference point for evaluating changes.
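A minimal sketch of tracking outputs per model version, assuming a fixed set of baseline prompts: the code generated for each prompt is fingerprinted with SHA-256 (from Python's `hashlib`), and a new model version's outputs are compared against the stored baseline. The helper names, version labels, and the whitespace normalization rule are hypothetical choices for illustration.

```python
import hashlib
from typing import Dict, List


def fingerprint(code: str) -> str:
    # Assumption: trailing whitespace and surrounding blank lines are cosmetic,
    # so they are normalized away before hashing.
    normalized = "\n".join(line.rstrip() for line in code.strip().splitlines())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def record_baseline(outputs: Dict[str, str]) -> Dict[str, str]:
    """Map each baseline prompt to the fingerprint of the code generated for it."""
    return {prompt: fingerprint(code) for prompt, code in outputs.items()}


def compare_versions(baseline: Dict[str, str], candidate: Dict[str, str]) -> Dict[str, List[str]]:
    """Report baseline prompts whose generated code changed or disappeared."""
    changed = sorted(p for p in baseline
                     if p in candidate and fingerprint(candidate[p]) != baseline[p])
    missing = sorted(p for p in baseline if p not in candidate)
    return {"changed": changed, "missing": missing}


# Usage with hypothetical version labels and outputs.
baselines = {"model-v1": record_baseline({
    "add two numbers": "def add(a, b):\n    return a + b\n",
    "reverse a string": "def rev(s):\n    return s[::-1]\n",
})}
v2_outputs = {
    "add two numbers": "def add(a, b):   \n    return a + b",  # whitespace-only change
    "reverse a string": "def rev(s):\n    return ''.join(reversed(s))\n",
}
print(compare_versions(baselines["model-v1"], v2_outputs))
# {'changed': ['reverse a string'], 'missing': []}
```

A changed fingerprint does not mean the new output is wrong; it marks the prompts whose baseline tests should be re-run and reviewed first.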

Integration and Interface Issues

AI code generators often produce code that must be integrated with existing systems or frameworks. Ensuring that the generated code interacts correctly with other components can be challenging, especially when changes are made incrementally. Integration issues can cause failures that are difficult to diagnose and fix.

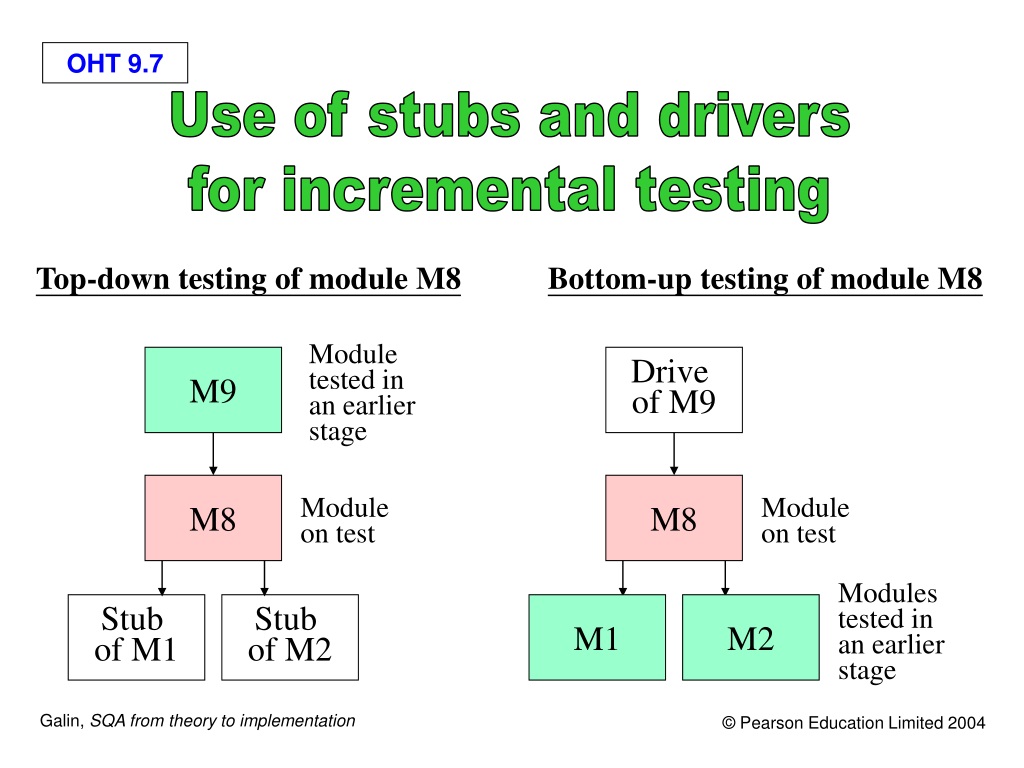

Solution: A modular testing approach can mitigate integration issues. Testing individual modules or components of the AI-generated code in isolation, before integrating them with other parts of the system, helps identify and address issues early. Integration testing frameworks that simulate real-world interactions can then provide more comprehensive coverage.
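To illustrate testing a generated component in isolation, here is a sketch using the standard `unittest` and `unittest.mock` modules. `get_display_name` stands in for a hypothetical AI-generated function that depends on an injected client; replacing that client with a `Mock` lets the function be tested before the real service it talks to is wired in.

```python
import unittest
from unittest.mock import Mock


# Hypothetical AI-generated function: the client is injected rather than
# created inside the function, which makes it testable in isolation.
def get_display_name(client, user_id):
    record = client.fetch_user(user_id)
    if record is None:
        return "unknown"
    return f"{record['first']} {record['last']}".strip()


class GetDisplayNameTest(unittest.TestCase):
    def test_formats_name(self):
        client = Mock()
        client.fetch_user.return_value = {"first": "Ada", "last": "Lovelace"}
        self.assertEqual(get_display_name(client, 42), "Ada Lovelace")
        # Also verify the interaction with the dependency, not just the result.
        client.fetch_user.assert_called_once_with(42)

    def test_missing_user(self):
        client = Mock()
        client.fetch_user.return_value = None
        self.assertEqual(get_display_name(client, 7), "unknown")
```

Run it with `python -m unittest <module>`. Once the component passes in isolation, the same tests can be complemented by integration tests that use the real client.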

Evolving Specifications and Requirements

The specifications and requirements of software projects often evolve over time, especially in agile development environments. AI code generators must adapt to these changing requirements, which can lead to mismatches between the generated code and the project's current requirements.

Solution: Maintaining a flexible, adaptable testing framework is crucial. Regularly updating test cases to reflect changes in project requirements keeps the tests relevant. Collaboration among developers, testers, and AI engineers is also important for keeping the testing process aligned with evolving specifications.
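One way to keep tests aligned with changing requirements, sketched here under the assumption that each requirement carries an ID and a revision number, is to record which revision each test was written against and flag tests whose requirement has since changed or been dropped. `TrackedTest`, `stale_tests`, and the requirement IDs are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TrackedTest:
    name: str
    requirement_id: str
    written_against: int  # requirement revision the test was written for


def stale_tests(tests: List[TrackedTest], requirements: Dict[str, int]) -> List[str]:
    """Return tests whose requirement was revised or removed after they were written."""
    stale = []
    for t in tests:
        current = requirements.get(t.requirement_id)
        if current is None or current > t.written_against:
            stale.append(t.name)
    return stale


tracked = [
    TrackedTest("test_login_lockout", "REQ-12", written_against=1),
    TrackedTest("test_export_csv", "REQ-30", written_against=2),
    TrackedTest("test_legacy_report", "REQ-07", written_against=1),
]
current_requirements = {"REQ-12": 2, "REQ-30": 2}  # REQ-07 has been dropped
print(stale_tests(tracked, current_requirements))
# ['test_login_lockout', 'test_legacy_report']
```

The output is a review list rather than an automatic verdict: a stale test may still be valid, but someone should confirm it against the revised requirement.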

Lack of Contextual Understanding

AI code generators often lack a deep understanding of the broader context in which the code will be used. As a result, they can produce code that is technically correct but does not meet the application's practical needs.

Solution: Incorporating domain knowledge into the testing process helps address contextual issues. Having domain experts review the AI-generated code and provide feedback helps ensure that it meets the application's practical requirements. User acceptance testing (UAT) can also show how well the code aligns with user needs.
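Domain knowledge can also be captured as executable checks that run alongside ordinary tests. In the sketch below, `apply_discount` is a hypothetical AI-generated function and `DOMAIN_RULES` are hypothetical rules a pricing expert might supply. The arithmetic is technically correct, yet a discount above 100% yields a negative price, which is the kind of contextual problem described above.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Callable, List, Tuple


# Hypothetical AI-generated function under review.
def apply_discount(price: Decimal, percent: Decimal) -> Decimal:
    discounted = price * (Decimal(100) - percent) / Decimal(100)
    return discounted.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Hypothetical rules from a domain expert: (description, check(price, percent, result)).
DOMAIN_RULES: List[Tuple[str, Callable[[Decimal, Decimal, Decimal], bool]]] = [
    ("result is never negative", lambda p, pct, r: r >= 0),
    ("result never exceeds the original price", lambda p, pct, r: r <= p),
]


def check_domain_rules(cases: List[Tuple[Decimal, Decimal]]) -> List[str]:
    """Run every domain rule against every (price, percent) case and collect violations."""
    violations = []
    for price, pct in cases:
        result = apply_discount(price, pct)
        for description, rule in DOMAIN_RULES:
            if not rule(price, pct, result):
                violations.append(f"{description}: price={price}, percent={pct}, got {result}")
    return violations


cases = [
    (Decimal("19.99"), Decimal("15")),
    (Decimal("5.00"), Decimal("100")),
    (Decimal("5.00"), Decimal("120")),  # input the generator's author may not have anticipated
]
print(check_domain_rules(cases))
# ['result is never negative: price=5.00, percent=120, got -1.00']
```

Whether a 120% discount should be rejected, clamped, or allowed is a business decision, which is why the rules come from domain experts rather than from the generated code itself.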

Resource Constraints

Incremental testing can be resource-intensive, requiring considerable computational power and time. For AI code generators, the sheer volume of generated code and the complexity of the tests can strain resources, particularly in continuous integration and continuous deployment (CI/CD) pipelines.

Solution: Optimizing the testing process is key to managing resource constraints. This can include prioritizing tests by their impact and likelihood of detecting issues, as well as using cloud-based testing infrastructure that scales as needed. Efficient test automation and parallel execution can also reduce testing time and resource usage.
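Prioritization and parallel execution can be combined in a few lines. The sketch below assumes each test has two illustrative scores, an impact weight and a historical failure rate, ranks tests by their product (a simple risk-style heuristic, not a standard formula), keeps the top `budget` tests, and runs them concurrently with `concurrent.futures.ThreadPoolExecutor`. `ScoredTest` and `run_prioritized` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class ScoredTest:
    name: str
    run: Callable[[], bool]
    impact: float        # assumption: 0..1, how critical the covered code is
    failure_rate: float  # assumption: fraction of past runs in which the test failed

    @property
    def priority(self) -> float:
        # Critical tests that often catch problems go first.
        return self.impact * self.failure_rate


def run_prioritized(tests: List[ScoredTest], budget: int, workers: int = 4) -> List[Tuple[str, bool]]:
    """Run the `budget` highest-priority tests in parallel; results keep priority order."""
    selected = sorted(tests, key=lambda t: t.priority, reverse=True)[:budget]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, not completion order.
        return list(pool.map(lambda t: (t.name, t.run()), selected))


scored = [
    ScoredTest("parser_roundtrip", lambda: True, impact=0.9, failure_rate=0.30),
    ScoredTest("ui_snapshot", lambda: True, impact=0.2, failure_rate=0.05),
    ScoredTest("auth_tokens", lambda: True, impact=1.0, failure_rate=0.10),
]
print(run_prioritized(scored, budget=2))
# [('parser_roundtrip', True), ('auth_tokens', True)]
```

Threads suit tests that mostly wait on I/O; CPU-bound tests would need a `ProcessPoolExecutor`, which requires picklable callables (lambdas are not). Note also that a test that has never failed gets priority zero under this heuristic, so a small floor value may be worth adding.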

Best Practices for Incremental Testing of AI Code Generators

Automate Testing Processes

Automating test creation, execution, and reporting can substantially improve the efficiency of incremental testing. Automated testing tools can quickly identify issues in AI-generated code and give developers immediate feedback.
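As a sketch of automated execution and reporting, assuming Python and the standard `unittest` module, the helper below runs a test class programmatically and emits a JSON summary that a CI job or dashboard could consume. `slugify` stands in for a hypothetical generated function, and the report fields are an illustrative choice rather than any standard format.

```python
import json
import re
import unittest


# Hypothetical AI-generated function under test.
def slugify(title: str) -> str:
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


class SlugifyTest(unittest.TestCase):
    def test_spaces_become_hyphens(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_punctuation_is_dropped(self):
        self.assertEqual(slugify("Hello, World!"), "hello-world")


def run_and_report(case: type) -> str:
    """Run a TestCase class programmatically and return a JSON summary."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(case)
    result = unittest.TestResult()
    suite.run(result)
    report = {
        "tests_run": result.testsRun,
        "failures": [test.id() for test, _ in result.failures],
        "errors": [test.id() for test, _ in result.errors],
        "passed": result.wasSuccessful(),
    }
    return json.dumps(report)


print(run_and_report(SlugifyTest))
# {"tests_run": 2, "failures": [], "errors": [], "passed": true}
```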

Adopt Continuous Testing

Integrating incremental testing into the CI/CD pipeline ensures that code is tested continuously throughout the development lifecycle. This approach helps catch issues early and maintain a high standard of code quality.

Improve Test Coverage

Focus on creating comprehensive test suites that cover a wide range of scenarios, including edge cases and error conditions. Regularly review and update test cases to ensure they remain relevant as the AI code generator evolves.
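Edge cases and error conditions can be listed as data and exercised with `unittest`'s `subTest`, so each case is reported separately when it fails. `safe_mean` is a hypothetical generated function used only for illustration, and the chosen cases are examples rather than an exhaustive list.

```python
import unittest


# Hypothetical AI-generated function under test.
def safe_mean(values):
    if not values:
        raise ValueError("safe_mean() requires at least one value")
    return sum(values) / len(values)


class SafeMeanTest(unittest.TestCase):
    def test_edge_cases(self):
        cases = [
            ([5], 5.0),                 # single element
            ([-1, 1], 0.0),             # values cancel out
            ([0.1, 0.2, 0.3], 0.2),     # floating-point rounding, hence assertAlmostEqual
            ([10**18, 10**18], 1e18),   # very large integers
        ]
        for values, expected in cases:
            with self.subTest(values=values):
                self.assertAlmostEqual(safe_mean(values), expected)

    def test_error_conditions(self):
        with self.assertRaises(ValueError):
            safe_mean([])            # empty input
        with self.assertRaises(TypeError):
            safe_mean(["a", "b"])    # non-numeric input
```

Keeping the cases in a list makes it cheap to append a new one whenever a regenerated version of the function exposes a scenario nobody anticipated.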

Use Mock Data and Simulations

Use mock data and simulations to test the AI-generated code under different conditions. This helps evaluate how well the code performs across various scenarios and can reveal potential issues.
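A seeded random generator is a simple way to produce mock data for several simulated scenarios while keeping any failure reproducible. The sketch assumes a hypothetical generated `normalize` function and checks two properties on every run (output length matches input, and all values fall in [0, 1]); the scenario names and value ranges are illustrative assumptions.

```python
import random
from typing import List


# Hypothetical AI-generated function: rescale scores into the range [0, 1].
def normalize(scores: List[float]) -> List[float]:
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def simulate(runs: int = 200, seed: int = 1234) -> List[List[float]]:
    """Feed seeded mock data through normalize() and return inputs that broke a property."""
    rng = random.Random(seed)  # fixed seed: any failing input can be replayed exactly
    failures = []
    for _ in range(runs):
        size = rng.randint(1, 20)
        scenario = rng.choice(["uniform", "constant", "outliers"])
        if scenario == "uniform":
            data = [rng.uniform(-100, 100) for _ in range(size)]
        elif scenario == "constant":
            data = [rng.uniform(-5, 5)] * size
        else:  # mostly small values plus one extreme outlier
            data = [rng.gauss(0, 1) for _ in range(size)] + [rng.choice([-1e6, 1e6])]
        result = normalize(data)
        if len(result) != len(data) or not all(0.0 <= r <= 1.0 for r in result):
            failures.append(data)
    return failures


print(len(simulate()))  # 0 failures for this implementation
```

The "constant" scenario is the interesting one here: without the `hi == lo` branch, the function would divide by zero on that kind of input.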

Foster Collaboration

Encourage collaboration among AI developers, software developers, and testers so that everyone shares an understanding of the code generator's functionality and requirements. Collaborative efforts can lead to more effective testing strategies and better identification of issues.

Monitor and Analyze Test Results

Continuously monitor and analyze test results to identify patterns and recurring issues. Analyzing test data helps in understanding the impact of changes and in improving the overall quality of the AI-generated code.
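As a sketch of this kind of analysis, assuming test history is available as a list of runs (oldest first), each mapping a test name to whether it passed, the functions below count repeated failures and detect tests that keep flipping between pass and fail. The history format, function names, and thresholds are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Run = Dict[str, bool]  # test name -> passed?


def recurring_failures(history: List[Run], min_failures: int = 2) -> List[Tuple[str, int]]:
    """Tests that failed in at least `min_failures` runs, most frequent first."""
    counts = Counter(name for run in history for name, passed in run.items() if not passed)
    return [(name, n) for name, n in counts.most_common() if n >= min_failures]


def flaky_tests(history: List[Run], min_flips: int = 2) -> List[str]:
    """Tests whose outcome changed between consecutive runs at least `min_flips` times."""
    flips: Counter = Counter()
    for prev, curr in zip(history, history[1:]):
        for name in prev.keys() & curr.keys():
            if prev[name] != curr[name]:
                flips[name] += 1
    return sorted(name for name, n in flips.items() if n >= min_flips)


history = [
    {"test_parse": True, "test_export": False, "test_auth": True},
    {"test_parse": False, "test_export": False, "test_auth": True},
    {"test_parse": True, "test_export": False, "test_auth": True},
    {"test_parse": False, "test_export": True, "test_auth": True},
]
print(recurring_failures(history))  # [('test_export', 3), ('test_parse', 2)]
print(flaky_tests(history))         # ['test_parse']
```

Recurring failures may point to a persistent weakness in the generated code, while flaky tests may need fixing in the test or its environment before their results can be trusted.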

Conclusion

Incremental testing for AI code generators presents several challenges, from the complexity of the generated code to the dynamic nature of AI models. However, by applying effective solutions and best practices, these challenges can be managed successfully. Automated testing, continuous integration, and collaboration are key strategies for ensuring the reliability of AI code generators. As AI technology continues to advance, addressing these challenges will be crucial for realizing the full potential of AI-driven code generation while maintaining high standards of software quality.